Your AI Chief of Staff

How Personal Assistants Became Our Second Brains

Vignette.

Elise is an independent consultant who dreads juggling logistics: flights, meeting times, invoices, kids’ schedules. Over six months she’s quietly delegated most of that to her AI assistant. She no longer opens a dozen different apps each morning. Her “assistant” triages her inbox, suggests schedule shifts, flags conflicting plans between work and family, and proposes travel itineraries, all in one conversational surface.

She still oversees things, but the burden of coordination has lifted.

The Invisible “Chief of Staff”

We are entering an age in which personal AI assistants quietly become a “second brain” — the backstage orchestration layer that keeps everything running. Just as a chief of staff coordinates across a C-suite, your AI agent manages logistics, reminders, priorities, and syncing across domains — but invisibly and adaptively.

People are overwhelmed by information and logistics, and AI is quietly becoming the backbone of productivity, handling what used to drain mental energy: inbox triage, travel planning, text replies, and scheduling. The goal is not just speed, but mental relief.

The Rise of a Backstage Assistant

Voice, context, and proactive orchestration

In 2025, assistants from Apple Intelligence to Microsoft Copilot and Meta AI have evolved beyond passive voice commands. They now predict, plan, and act across contexts:

Voice assistants are increasingly proactive rather than reactive, suggesting calendar fixes, surfacing trip delays, and aggregating updates.

Email and text automation can now triage, summarize, and draft, helping users move from inbox chaos to prioritized clarity.

Travel planning tools let users issue natural-language instructions like “Get me to Chicago next Tuesday with minimal layovers,” and the AI handles booking, coordination, and confirmation syncs.

At CES 2025, nearly every major platform showcased assistants built to coordinate across devices and ecosystems, turning isolated tools into one cohesive personal system.1
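Flagging conflicts between work and family plans, or suggesting calendar fixes, starts with a simple check: do two time ranges overlap? The Python sketch below shows that check, assuming events from separate calendars have already been merged into one list. The `Event` class, the `find_conflicts` function, and the sample events are hypothetical illustrations, not any assistant's actual API.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Event:
    title: str
    calendar: str  # hypothetical source label, e.g. "work" or "family"
    start: datetime
    end: datetime


def find_conflicts(events: list[Event]) -> list[tuple[Event, Event]]:
    """Return every pair of events whose time ranges overlap."""
    ordered = sorted(events, key=lambda e: e.start)
    conflicts = []
    for i, current in enumerate(ordered):
        for later in ordered[i + 1:]:
            # Events are sorted by start, so once one begins after `current`
            # ends, no subsequent event can overlap it either.
            if later.start >= current.end:
                break
            conflicts.append((current, later))
    return conflicts


# Hypothetical sample day mixing work and family calendars.
events = [
    Event("Client call", "work", datetime(2025, 3, 4, 15, 0), datetime(2025, 3, 4, 16, 0)),
    Event("School pickup", "family", datetime(2025, 3, 4, 15, 30), datetime(2025, 3, 4, 16, 0)),
    Event("Invoice review", "work", datetime(2025, 3, 4, 17, 0), datetime(2025, 3, 4, 17, 30)),
]

for a, b in find_conflicts(events):
    print(f"Conflict: {a.title} ({a.calendar}) overlaps {b.title} ({b.calendar})")
# prints: Conflict: Client call (work) overlaps School pickup (family)
```

Sorting by start time lets the inner loop stop as soon as a later event begins after the current one ends, so the check stays cheap for a typical day's calendar.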

Key Tensions in the New “Second Brain” Economy

Fragmented ecosystems.

Each tool (calendar, email, task manager, travel) remains its own silo. True orchestration depends on stable APIs between these tools, yet those APIs frequently change or break.
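One common defense against flaky integrations is to retry transient failures with exponential backoff. A minimal Python sketch, assuming the integration raises `ConnectionError` or `TimeoutError` on temporary outages; `call_with_retry` and `flaky_fetch` are hypothetical names, and `flaky_fetch` simply simulates an endpoint that fails twice before succeeding.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retry(
    fn: Callable[[], T],
    attempts: int = 4,
    base_delay: float = 0.5,
    retry_on: tuple[type[BaseException], ...] = (ConnectionError, TimeoutError),
) -> T:
    """Call `fn`, retrying transient failures with exponential backoff plus jitter."""
    for attempt in range(attempts):
        try:
            return fn()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of retries: surface the failure instead of hiding it
            # Delay doubles each time (base, 2x base, 4x base, ...) plus random
            # jitter so many clients don't all retry at the same instant.
            delay = base_delay * (2 ** attempt)
            time.sleep(delay + random.uniform(0, delay))
    raise ValueError("attempts must be at least 1")


# Hypothetical stand-in for a calendar API that fails twice, then recovers.
calls = {"n": 0}


def flaky_fetch() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("temporary outage")
    return "calendar synced"


print(call_with_retry(flaky_fetch, base_delay=0.01))  # prints: calendar synced
```

Retries only cover temporary outages. When an API genuinely changes, for example a renamed field or a retired endpoint, the final error is re-raised so the broken automation surfaces instead of failing silently.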

Privacy and data sovereignty.

Seamless orchestration demands broad permissions, but full access to your location, inbox, and contacts raises privacy red flags. A 2025 UCL report found that browser-integrated AI assistants continued collecting context data even after users switched to private modes.2 Similarly, TechCrunch warned that AI platforms are increasingly “normalizing full-access requests” for basic functions like summarizing mail.3 Tech Policy Press calls this “consent erosion by design.”4

Over-reliance and manipulation.

As these systems gain autonomy, they might also influence, or subtly manipulate, user behavior. Wired has referred to them as potential “manipulation engines.”5

Cold start learning curve.

Every assistant needs time to understand your habits and context. Early on, that means missed nuances or wrong assumptions.

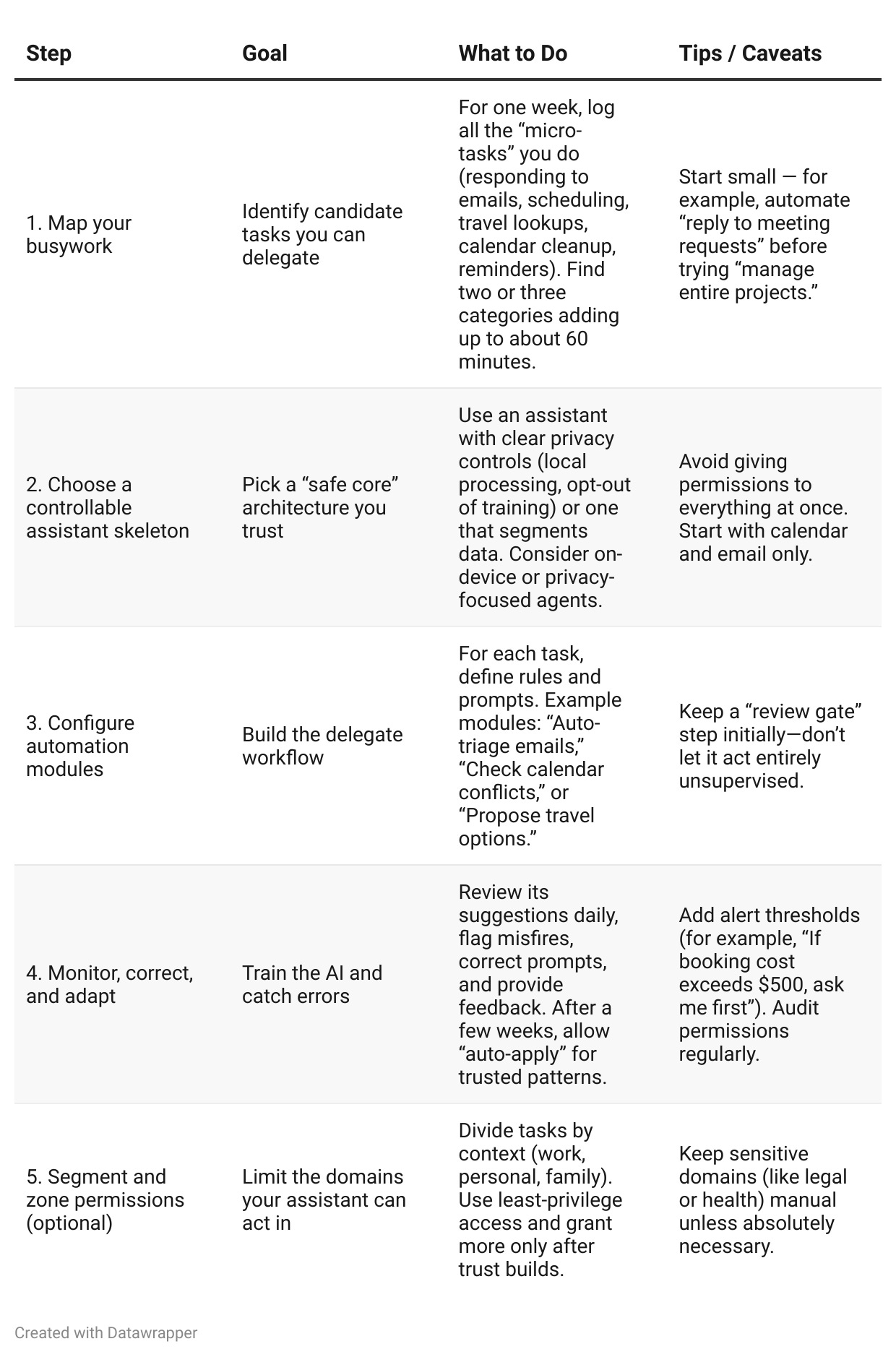

Playbook: Offload 1 Hour/Day to Your Personal AI Chief of Staff

Here are five steps readers can take to gradually train a personal AI to absorb one hour of busywork per day.

Why It Matters

The real benefit isn’t just speed. It’s cognitive bandwidth. When AI handles the coordination layer, you reclaim mental space for deeper work, creativity, and presence.

Cognitive bandwidth regained. The biggest gains come from automating dozens of small coordination tasks.

Composability. Your AI becomes the glue across tools, not another tool you must manage.

Scaled personalization. As context builds, the AI begins offering insights across domains: travel synced to family schedules, meeting blocks optimized for energy peaks, etc.

This is the essence of the metaphor: the Personal Chief of Staff - invisible, anticipatory, and relentlessly practical.

Guardrails for an Ethical Assistant

Audit permissions monthly. Remove what’s not essential.

Keep human veto power. Never allow unsupervised high-impact actions.

Use local or encrypted options. Whenever possible, process data on-device.

Be alert to bias. Notice if recommendations subtly favor specific vendors or brands.

Maintain fallback independence. Don’t lock into a single ecosystem — diversify.

Prepare for API fragility. Expect automations to break occasionally, and be ready to rebuild them.
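The “human veto” guardrail can be expressed as a gate in front of every action the assistant takes. A minimal Python sketch, assuming each action is tagged as high- or low-impact; `Action`, `execute`, and `decline` are hypothetical names, and `decline` stands in for a confirmation prompt the user turns down.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Action:
    description: str
    # Defaults to True so that any action nobody classified still needs approval.
    high_impact: bool = True


def execute(action: Action, run: Callable[[], str], approve: Callable[[Action], bool]) -> str:
    """Run low-impact actions directly; high-impact ones only with explicit human approval."""
    if action.high_impact and not approve(action):
        return f"Skipped (not approved): {action.description}"
    return run()


def decline(action: Action) -> bool:
    # Stand-in for a confirmation prompt the user turns down.
    return False


print(execute(Action("Archive newsletters", high_impact=False), lambda: "Archived newsletters", decline))
# prints: Archived newsletters
print(execute(Action("Pay supplier invoice"), lambda: "Invoice paid", decline))
# prints: Skipped (not approved): Pay supplier invoice
```

Making `high_impact` default to `True` means an action nobody bothered to classify still waits for approval, which errs on the side of the guardrail.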

The healthiest assistants are those that empower, not enclose, your attention.

Looking Ahead: The 2025–2026 Trajectory

Memory and context layering. Long-term contextual “memory graphs” are emerging — assistants that recall your preferences, phrasing, and daily rhythms across months.

Cross-agent orchestration. We’ll soon see specialized micro-agents — for travel, wellness, or scheduling — working under a unified meta-agent.

Privacy-first frameworks. The GOD model (Guardrails, On-device training, Differential privacy) illustrates a future where your AI learns without leaking personal data.6

Agent marketplaces. Similar to app stores, assistants will soon have verified “skills” with compliance and audit standards.

Policy and regulation. Expect 2025–2026 frameworks mandating transparency in data use and opt-in consent for long-term memory storage.7
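Cross-agent orchestration boils down to routing: a meta-agent decides which specialist should take each request. The Python sketch below uses naive keyword overlap as the routing rule purely for illustration; a production assistant would more likely use a language model to classify intent. `MicroAgent`, `MetaAgent`, the keyword sets, and the handlers are all hypothetical.

```python
import re
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class MicroAgent:
    name: str
    keywords: set[str]
    handle: Callable[[str], str]


@dataclass
class MetaAgent:
    agents: list[MicroAgent] = field(default_factory=list)

    def route(self, request: str) -> str:
        """Send the request to the specialist whose keywords overlap it most."""
        words = set(re.findall(r"[a-z]+", request.lower()))
        scored = [(len(agent.keywords & words), agent) for agent in self.agents]
        score, best = max(scored, key=lambda pair: pair[0], default=(0, None))
        if best is None or score == 0:
            return "No specialist matched; ask the user to clarify."
        return f"[{best.name}] {best.handle(request)}"


meta = MetaAgent([
    MicroAgent("travel", {"flight", "hotel", "layovers", "trip"},
               lambda r: f"drafting itinerary for: {r}"),
    MicroAgent("scheduling", {"meeting", "calendar", "reschedule"},
               lambda r: f"proposing new slots for: {r}"),
    MicroAgent("wellness", {"sleep", "workout", "break"},
               lambda r: f"adjusting routine for: {r}"),
])

print(meta.route("Get me to Chicago next Tuesday with minimal layovers"))
# prints: [travel] drafting itinerary for: Get me to Chicago next Tuesday with minimal layovers
```

Returning a clarification request when nothing matches, rather than guessing, keeps the meta-agent from silently sending a task to the wrong specialist.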

If you start now by mapping tasks, training your agent, and guarding your data, your AI “chief of staff” will mature alongside the next generation of orchestration tools.

Footnotes

1. Trend Micro, CES 2025: A Comprehensive Look at AI Digital Assistants and Their Security Risks (2025).

2. University College London, AI Web Browser Assistants Raise Serious Privacy Concerns (August 2025).

3. TechCrunch, For Privacy and Security, Think Twice Before Granting AI Access to Your Personal Data (July 2025).

4. Tech Policy Press, The Privacy Challenges of Emerging Personalized AI Services (2025).

5. Wired, AI Agents: From Personal Assistants to Manipulation Engines (2025).

6. arXiv preprint 2502.18527, The GOD Model: Privacy-Preserving Personal AI Architectures (2025).

7. University College London, AI Web Browser Assistants Raise Serious Privacy Concerns (August 2025).